End-of-year SOL results will be released this summer. They are much anticipated as a measure of how well kids are recovering from the enforced shutdowns during COVID.

Some readers know the current testing system like the backs of their hands. But nearly all of us took standardized tests in school in which everyone answered the same questions.

That is not how math and reading SOL tests are designed now in Virginia.

Computer Adaptive Tests (CAT) – the link provides more detail than I will here – use AI algorithms to personalize the test for each student. SOLs have been computer adaptive for more than a decade.

How a student responds to a question determines the difficulty of the next item. A correct response leads to a more difficult item, while an incorrect response results in the selection of a less difficult item for the student.
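The up-and-down rule described above can be sketched in a few lines of Python. This is a simplified illustration, not the algorithm Virginia uses: operational CATs typically choose items from a statistical estimate of the student's ability rather than a fixed one-step staircase. The function names, the 1 to 10 difficulty scale, and the starting level are all assumptions made for the example.

```python
def next_difficulty(current, was_correct, step=1, lowest=1, highest=10):
    """Move one step harder after a correct answer, one step easier after a miss.

    The 1-10 difficulty scale and the step size are hypothetical.
    """
    proposed = current + step if was_correct else current - step
    # Keep the difficulty inside the range of items that exist in the pool.
    return max(lowest, min(highest, proposed))


def run_adaptive_test(responses, start=5):
    """Return the difficulty of each item delivered for a sequence of answers.

    `responses` is a list of booleans (True = correct), one per item.
    """
    delivered = []
    level = start
    for was_correct in responses:
        delivered.append(level)
        level = next_difficulty(level, was_correct)
    return delivered


# Two correct answers raise the difficulty; a miss lowers it again.
print(run_adaptive_test([True, True, False, True]))  # [5, 6, 7, 6]
```

Two students who answer differently see different items after the first question, which is why no two students necessarily take the same test.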

CATs test each student's level of knowledge more completely because they do not keep serving questions that are too easy or too hard for that particular student.

Important changes were made for the tests taken in Spring of 2023.

Based upon legislation in 2021, Spring 2023 tests administered questions on grade level, one level up or one level down depending upon the student’s progress through the earlier parts of the exam.

That seems an improvement, offering a more thorough measure of student learning and potentially being more engaging for each student.

The math and reading blueprints for 2023 SOLs contain information for two types of tests, the online computer adaptive test (CAT) and the traditional test.

A passage-based CAT is a customized assessment where each student receives a unique set of passages and items.

This is in contrast to the traditional test in which all students who take a particular version (paper, large print, or braille) of the test receive the same passages and respond to the same test questions.

All online versions of the Reading and Math Standards of Learning (SOL) test (including audio) are computer-adaptive. Paper tests are given only by exception under specific criteria.

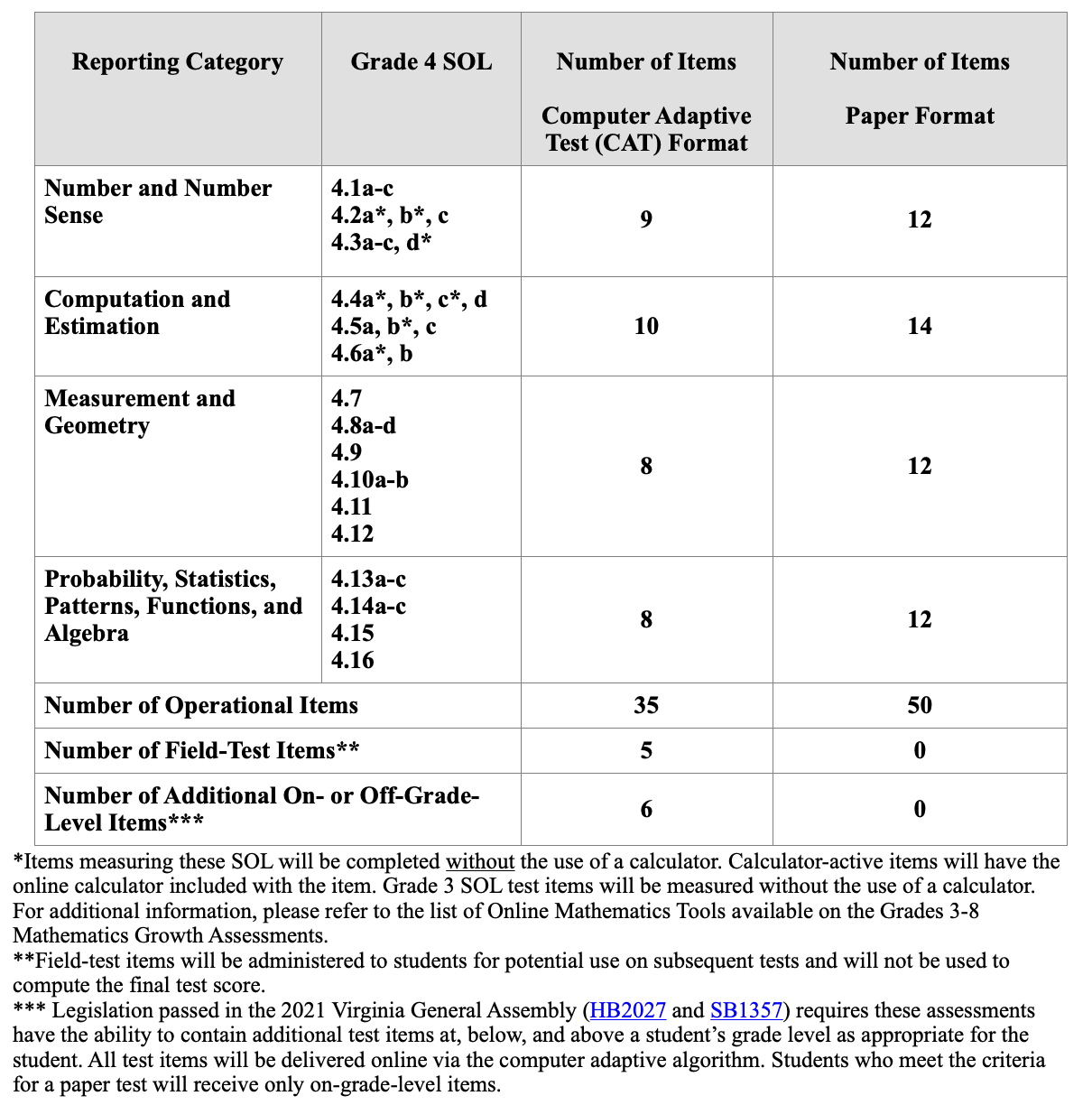

As an example of the change, see the Grade 4 Math test blueprint, new for 2023:

Beginning in spring 2023, the computer adaptive Standards of Learning tests will include a section of additional items at the end of the test.

The computer algorithm may deliver items one grade level above or one grade level below a student’s current grade based upon the student’s responses to the on-grade-level items.

The Test Scaled Score (0 to 600) and corresponding performance level (i.e., pass/proficient, pass/advanced, fail/basic, fail/below basic) are based upon a student’s performance on the on-grade-level Operational Items only.

The student’s responses to the on-grade-level Operational Items and the Additional Items that may be on grade level, one grade level above, or one grade level below the current grade level will be reflected in the student’s Vertical Scaled Score.
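To make the two-score arrangement in the blueprint concrete, here is a rough Python sketch. The blueprint says only that the grade level of the Additional Items depends on the student's responses to the on-grade-level items; it does not publish the rule. The 40 percent and 80 percent cutoffs below, and every name in the code, are hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Response:
    grade_level: int   # grade level the item was written for
    operational: bool  # True for on-grade-level Operational Items
    correct: bool


def additional_item_grade(current_grade, operational, low=0.4, high=0.8):
    """Pick the grade level for the Additional Items section.

    The `low` and `high` cutoffs are invented for this sketch; the actual
    decision rule is not stated in the blueprint.
    """
    share_correct = sum(r.correct for r in operational) / len(operational)
    if share_correct >= high:
        return current_grade + 1
    if share_correct <= low:
        return current_grade - 1
    return current_grade


def split_for_scoring(responses):
    """Separate the responses that feed each reported score.

    Test Scaled Score: on-grade-level Operational Items only.
    Vertical Scaled Score: Operational Items plus Additional Items.
    """
    scaled_inputs = [r for r in responses if r.operational]
    vertical_inputs = list(responses)
    return scaled_inputs, vertical_inputs


# A Grade 4 student who answers every Operational Item correctly.
operational = [Response(4, True, True) for _ in range(4)]
print(additional_item_grade(4, operational))  # 5
```

The point of the split is that the pass/fail performance level cannot be raised or lowered by the above-grade or below-grade items; those only inform the Vertical Scaled Score.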

There will inevitably be some kinks the first time this is done at this scale in Virginia. They will be worked out over time. CAT lends itself to matrix sampling, a significant advantage.

It will be interesting to see both the raw pass/fail rates and the assessments of such things as:

- any net effects on scores compared to past tests, both on the group and individual levels; and

- any substantive differences between CAT and paper tests.

But it seems right.
