This is the seventh in a series about Virginia’s Standards of Learning educational assessments.

by Matt Hurt

Widespread shutdowns of Virginia’s public schools during the COVID-19 epidemic last year resulted in a significant loss of instructional hours. To make up for lost ground, teachers need to spend as much time as possible with their students. Unfortunately, a new measure enacted with the best of intentions will take students and teachers out of the classroom for two more rounds of assessments, one in the fall and one in the winter.

While the extra tests might be suitable for some school districts, local officials should be allowed to decide whether the assessments best meet the needs of their students.

In 2017 the Virginia Board of Education implemented new criteria for the Standards of Accreditation, which determine if schools are performing up to snuff. A student who failed an SOL test but demonstrated sufficient “growth” from the previous year counted the same in the accreditation calculation as a student who passed. Under the growth system, students who failed a 3rd grade SOL test would be given four years to catch up and reach proficiency by the 7th grade, as long as they demonstrated progress toward that goal each year.

Given that the original point of the SOLs was to ensure that kids were on track to graduate from high school, giving them time to catch up was a wonderful thing. It is not realistic to expect every child to be successful out of the gate. The old system of basing accreditation on pass rates alone incentivized schools to focus on bubble kids (those who were close to passing in previous years) and less on the needier kids. The shift in focus to “growth” encouraged schools to work with all kids.

During the 2021 General Assembly legislative session, HB2027/SB1357 introduced a through-year “growth” assessment program. The idea was to assess students in grades 3 through 8 in reading and math in the fall and winter and to use those results as a baseline. The spring SOL data would serve as the post-test to determine proficiency as well as growth from the previous fall. Legislators hoped that this “growth” measure, which replaces the spring-to-spring growth methodology used in 2018 and 2019, also would provide teachers with a high-quality assessment to help them in their instruction.

There are four major problems with the new through-year “growth” scheme, however. First, the stated objectives of testing for formative purposes and setting a baseline measure of growth are in conflict. Second, the extra assessments rob teachers of critical instructional time they need more than ever. Third, the data has proven too unreliable to use in many cases for guiding instruction. Fourth, a student potentially could demonstrate growth every year and never demonstrate proficiency.

Problem #1. When teachers administer a formative assessment, it is important for students to try their best to “show what they know.” Teachers use the data to remediate students who demonstrate need. When a pre-test measuring baseline performance is used in conjunction with a post-test measuring growth, there is no incentive for teachers to encourage students to try their best. Students who “sandbag” in the fall will show the most growth by the spring. This is problematic because there is no metric for measuring “sandbagging,” nor even a means by which to detect it. That’s not to say that teachers actively work to get their students to put forth a poor effort. There’s just no incentive for teachers to encourage students to try their best on the fall tests.
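To make the arithmetic concrete, here is a minimal Python sketch of how a low-effort fall score inflates measured growth. The two students, their scores, and the variable names are all invented for illustration; none of it comes from actual SOL data or from the state’s growth formula.

```python
# Hypothetical scores for two students with the same spring result.
# These numbers are invented for illustration only.
students = {
    "full_effort": {"fall": 370, "spring": 400},
    "sandbagged": {"fall": 330, "spring": 400},
}

# Measured growth is assumed here to be the spring score minus the fall score.
growth = {name: s["spring"] - s["fall"] for name, s in students.items()}

# The student with the lower fall score shows the most "growth",
# even though both students finished the year at the same level.
most_growth = max(growth, key=growth.get)

print(growth)
print(most_growth)
```

Nothing in the two scores themselves distinguishes a student who truly started lower from one who simply did not try in the fall.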

Problem #2. The new legislation requires that all of the assessments (fall growth, winter growth, and spring SOL) be administered within 150% of the time it takes to administer the traditional SOL test. While the standard can be met with respect to students’ seat time taking the tests, the procedure cuts into teachers’ time. Security training, extra test sessions to meet the accommodations of students with disabilities, extra test sessions to assess students who had been quarantined due to COVID exposure or diagnosis, and altered schedules to ensure sufficient staff for test administration and proctoring are just a few of the considerations that impact teachers’ time. This fall, district officials reported that administration of the fall “growth” assessments disrupted instruction within their schools for anywhere from three to twenty-one days.

Problem #3. Data collected from the fall through-year “growth” assessments have proven unreliable. Comparing spring results to spring results covers the full yearly cycle. Comparing fall results to spring results yields different results because it does not take into account the inevitable “fade” effect over the summer. Thus, fall-to-spring comparisons tend to look better than spring-to-spring comparisons.
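The effect of summer fade on the two comparisons can be sketched in a few lines of Python. The scores below are hypothetical and chosen only to show the arithmetic, and the `growth` helper is an assumed simplification (post-test minus pre-test), not the state’s actual methodology.

```python
# Hypothetical scale scores for one student; not real data.
spring_2021 = 380   # end of the prior school year
fall_2021 = 360     # start of the new year, after summer "fade"
spring_2022 = 395   # end of the current school year

def growth(pre, post):
    """Assumed simplification: growth is post-test minus pre-test."""
    return post - pre

spring_to_spring = growth(spring_2021, spring_2022)
fall_to_spring = growth(fall_2021, spring_2022)
summer_fade = spring_2021 - fall_2021

# The fall-to-spring figure equals the spring-to-spring figure
# plus whatever was lost over the summer.
print(spring_to_spring, fall_to_spring, summer_fade)
```

Under these assumptions the fall baseline counts the recovery of summer losses as new growth, which is why the fall-to-spring number comes out larger.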

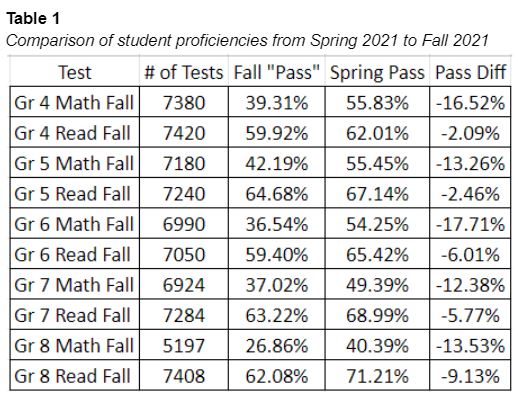

Table 1 summarizes results for approximately 35,000 students who took a spring 2021 SOL test and also took the corresponding fall 2021 through-year “growth” assessment. Students generally proved less proficient on the same content in the fall than in the spring. A few schools’ and divisions’ fall results aligned with their spring results, but most did not.

There are a few caveats to consider when evaluating this data.

- Due to the time constraints imposed by the state code that mandates these tests (described in Problem #2 above), the fall tests are shorter and assess fewer skills than the SOL tests do. For example, the Math 5 fall test, which measured skills from Math 4 SOLs, contained 31% fewer questions and assessed 16% fewer standards than the Math 4 SOL test.

- More time, energy, money, and personnel were allocated to summer learning in 2021 than at any other time in known history. More students participated in summer school, and the pupil-teacher ratios were much lower than in previous years. It is unreasonable to expect that these efforts would not demonstrate some improvements in outcomes by the fall.

- Given the incentive structures outlined in Problem #1 above, it is quite likely that students were not encouraged to do their best on the fall tests as they traditionally are on the SOL tests. Every year, teachers and schools offer students incentives, such as pizza parties, for good effort on their SOL tests. During the administration of the through-year “growth” assessment this fall, not one pizza party was offered to incentivize student effort.

Problem #4. Given the concerns about the data, it is possible that more students will demonstrate growth by the spring of 2022 than warranted. If the fall data was skewed negatively (as was demonstrated in Table 1), and the administration protocols and incentives associated with spring SOL data remain consistent with previous years, as is expected, then many more students will demonstrate “growth” from fall 2021 to spring 2022 than from spring 2021 to spring 2022. As the Virginia Department of Education can be counted on to use the more beneficial statistic for school accreditation, many students likely will demonstrate “growth” from fall to spring each year yet never reach proficiency.

Proposed Solution.

Many variables impact student achievement around the state. Why should school districts be compelled to adopt a one-size-fits-all solution that disrupts so many days in our schools? Why not allow divisions to choose whether or not through-year “growth” assessments are worth the chaos and loss of instructional time? We already have a growth measure in place (the spring-to-spring growth method) that has proven beneficial for students and schools. Why not allow schools to choose to use it?

As was demonstrated in the piece “Outperforming the Educational Outcome Trends - Virginia’s Region VII,” some schools and divisions have made significant improvements in student outcomes in recent years. Region VII (and other divisions in the Comprehensive Instructional Program consortium) has used common benchmarks for years to monitor progress and obtain formative data to drive instruction. These assessments have proven reliable to the point that, on average, a school’s SOL pass rate can be predicted from benchmark data to within plus or minus one point. This school year, some divisions have elected to suspend administering these assessments in order to give back to teachers some of the instructional time stolen by the through-year “growth” assessments.

In conclusion, the General Assembly would better serve the school children of Virginia by doing the following.

- Determine what is working, and then do not intervene in those successes. In other words, “if it ain’t broke, don’t fix it.”

- Do not expect that less instructional time will yield better student outcomes, at least in real terms. You can’t fatten a pig by continuously weighing it. There are sufficient formative and summative assessments in every division, and more assessments serve as a distraction.

- Do not expect that “pre-test” data will serve as a reliable student-growth measure. The incentives in place do not support accurate data collection in the fall. Do expect that such a measure can demonstrate “growth” that doesn’t necessarily yield proficiency over time.

- Determine if we really wish to seriously attack the real problem of subgroup gaps or if we wish to whitewash the problem with a flawed through-year “growth” methodology.

Matt Hurt is executive director of the Comprehensive Instructional Program based in Wise County.
